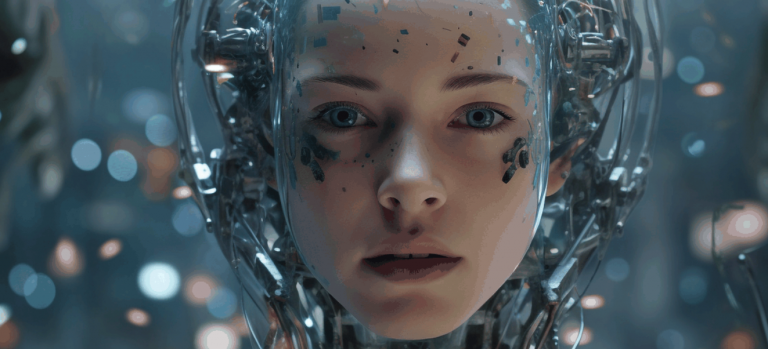

In recent years, generative artificial intelligence (AI) has advanced rapidly. Computers can now produce images, videos, text, and even human-like dialogue. These technological breakthroughs open up new opportunities, but they also raise ethical concerns.

Understanding Generative AI

Generative AI is a branch of AI that uses algorithms to produce novel content such as images, videos, text, and audio.

The most popular examples of generative AI systems are:

AI can certainly provide many benefits for entrepreneurs, including greater operational efficiency, lower costs, and expanded creative capacity. However, organizations need to understand how AI works to decide which tasks it is best suited for. Above all, they should understand its limitations so that they do not form unrealistic expectations.

Benefits of Using Generative AI in Business

Because it can produce unique and innovative content, generative AI has grown popular among businesses. One of its most significant advantages is the time it saves. Companies now use it to automate content creation, which lets employees produce more output in less time.

This advantage is particularly useful for businesses that need a high volume of creative output, such as those in advertising and marketing. AI is also expected to be integrated into digital employee experience solutions, particularly for analytics and forecasting.

Generative AI can help businesses personalize their materials more deeply while saving time and money on production. By analyzing customer data, these technologies can create content tailored to individual consumers’ tastes, boosting engagement and satisfaction.

Challenges of Using Generative AI

The technology’s potential benefits come at a cost. Here are six generative AI concerns that corporate leaders should consider:

Misinformation and manipulation

Generative tools can be used to create deceptive or false content, which spreads disinformation. Deepfakes, for example, are realistic but fabricated videos or images, and they raise concerns about trust, credibility, and malicious use.

To address this, technical solutions such as deepfake detection algorithms and media authentication tools need to be developed. Laws governing the use and distribution of AI-generated material should also be put in place.

Privacy and data protection

To produce high-quality results, generative models frequently require vast volumes of data. Privacy problems arise when sensitive or personal information is used without proper authorization, or when generated material infringes on individuals’ privacy. Robust data protection mechanisms, data anonymization, and explicit consent are vital to ensuring the ethical use of AI technologies and upholding privacy rights.

Intellectual property rights

Using AI trained on copyrighted materials can lead to intellectual property concerns. Generating content that infringes existing copyrights or patents can raise legal and ethical issues. Addressing this requires training AI on public-domain or properly licensed data, and following appropriate attribution and copyright practices when creating and distributing content.

Algorithmic accountability and transparency

The internal workings of generative AI models can be complex and opaque, which makes it difficult to understand how they produce their results. This lack of transparency raises questions about accountability and possible biases. Making AI models more interpretable can help alleviate these concerns, as can developing methods that provide insight into how models reach their outputs.

Unintended consequences and unforeseen uses

Unintended consequences can occur when generative AI technology is used for purposes other than those intended. Chatbot models, for example, may engage in harmful or abusive conversations as a result of biased or inappropriate training data. Continuous monitoring, assessment, and rigorous ethical guidelines can help detect and prevent such outcomes. Developers should consider the potential risks and social implications of generative AI systems throughout their development and deployment.

Social and economic disruption

Generative AI has the potential to disrupt a wide range of sectors, possibly displacing workers or widening existing inequalities. To ensure a just and equitable transition, these negative social and economic effects should be limited through responsible deployment, reskilling and upskilling initiatives, and public discussion of the ethical use of AI.

Solutions and Strategies

Image source: https://pixabay.com/ru/illustrations/%D0%B1%D0%B8%D0%B7%D0%BD%D0%B5%D1%81-%D0%B8%D0%B4%D0%B5%D1%8F-%D1%80%D0%BE%D1%81%D1%82-%D0%B1%D0%B8%D0%B7%D0%BD%D0%B5%D1%81-%D0%B8%D0%B4%D0%B5%D1%8F-3189797/

Businesses adopting generative AI can use several strategies to address these challenges:

Robust data governance

To protect privacy and limit the misuse of personal data in AI models, implement robust data governance procedures such as data anonymization, consent management, and regular audits.
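As a rough illustration of the data anonymization step, the Python sketch below cleans a customer record before it is used for model training or analytics. It assumes a hypothetical record layout (fields named `name`, `email`, `phone`, and `age`) and a hypothetical policy: direct identifiers are replaced with keyed HMAC-SHA256 tokens, and exact ages are coarsened into 10-year bands. Strictly speaking, this is pseudonymization rather than full anonymization. Whoever holds the key can still link tokens back to known values, so in practice it would sit alongside access controls, consent management, and audits.

```python
import hashlib
import hmac
import secrets

# Hypothetical schema: which fields count as direct identifiers is an
# assumption for this sketch, not a standard list.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def age_band(age: int, width: int = 10) -> str:
    """Generalize an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def pseudonymize_record(record: dict, key: bytes) -> dict:
    """Return a cleaned copy of `record` for use in an AI pipeline.

    - Direct identifiers become keyed HMAC-SHA256 tokens: the same value
      always maps to the same token, so records can still be linked, but
      the raw value is not kept.
    - Exact integer ages are coarsened into 10-year bands.
    - All other fields are copied unchanged.
    """
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            token = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            cleaned[field] = token.hexdigest()[:16]  # truncated for readability
        elif field == "age" and isinstance(value, int):
            cleaned[field] = age_band(value)
        else:
            cleaned[field] = value
    return cleaned


if __name__ == "__main__":
    # The key should be stored apart from the data (e.g. in a secrets
    # manager); anyone holding it can test guesses against the tokens.
    key = secrets.token_bytes(32)
    raw = {"name": "Jane Doe", "email": "jane@example.com",
           "age": 34, "purchase": "laptop"}
    print(pseudonymize_record(raw, key))
```

A keyed HMAC is used here instead of a plain hash because unkeyed hashes of names or email addresses can be reversed by hashing lists of likely values. Without the key, that lookup is not possible.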

Explainability and transparency

Develop interpretability methods and tools that help users understand how generative AI models produce their outputs. Transparency improves accountability and makes it easier to identify and mitigate biases or unexpected outcomes.

Collaboration and multi-stakeholder engagement

Engage in multi-stakeholder partnerships with researchers, policymakers, industry professionals, and the public to create standards, policies, and legislation governing the ethical use of generative AI technology.

Continuous monitoring and evaluation

Monitor and analyze AI models regularly to uncover biases, unexpected effects, or abuse. Implement feedback loops and accountability procedures to support continuous improvement.

Public awareness and education

Increase public awareness of generative AI technologies, their potential, and their ethical implications. Educational programs can help people recognize and critically evaluate AI-generated material.

Regulatory frameworks

Create regulatory frameworks and rules for generative AI technology that address issues such as disinformation, intellectual property rights, and ethical use. These frameworks should strike a balance between innovation and ethical concerns.

Conclusion

By examining the ethical implications of generative AI and adopting suitable solutions, we can promote its responsible development, deployment, and use, building trust, fairness, and positive social impact. To realize the promise of generative AI responsibly and sustainably, it is critical to strike a balance between innovation and ethical considerations.